How Does Duplicate Content Impact SEO?

Every website owner wants their content to rank high on search engine results pages (SERPs) and attract visitors to their site. However, one of the biggest challenges they face is creating original and unique content that ranks on search engines.

Duplicate content is one of the most common issues in search engine optimization. If your website has multiple pages containing identical or very similar content, it can undermine your SEO efforts.

In this post, we’ll explore how duplicate content affects your website’s SEO and what you can do to avoid it.

Ogilvy MarTech is a marketing technology solution provider based in Sri Lanka. We are an award-winning web design and development company with over 10 years of experience. We specialize in Technical SEO, SEO consulting, SEO Audits, and more. If you're seeking SEO services, get in touch with our team of SEO specialists.


What is Duplicate Content?

Duplicate content is identical or substantially similar content that appears at more than one URL, either on different pages of your own website or on other websites. When search engines find several versions of the same content, they typically group them and pick one version to show in search results.

Duplicate content makes it harder for search engines to tell which version to index and rank. The version they choose may not be the one you prefer, and the copies compete with each other, which can lead to lower rankings and reduced visibility to potential visitors.

Why Is Duplicate Content Harmful to SEO?

Contrary to popular belief, duplicate content is usually not as damaging as it seems, and it rarely triggers a penalty on its own. If you're not careful, though, it can still harm your SEO. The most common effects are lost organic traffic, lower rankings, and the potential loss of SERP presence.

When search engines find the same content across multiple pages or websites, they usually filter out all but one version, and content that looks copied may be treated as non-original. This hurts your rankings because search engines prioritize original, high-quality content. In extreme cases, where duplicate material is used to deceive search engines or to spam them, your website may be penalized or de-indexed.

Duplicate content can also dilute page authority. When the same content lives at several URLs, the backlinks it earns are spread across those URLs instead of consolidating on one. Each copy then receives only a fraction of the link equity the content could have earned as a single page.

Before moving on to the solutions, let's look at how to find duplicate content.

How to Identify Duplicate Content

There are several ways to check whether your content is duplicated. One of the most effective is to use tools such as Copyscape and Scrapebox, which compare your content against other pages on the web to find identical or similar text.
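These tools don't publish exactly how they match text, so what follows is only a sketch of one common technique for measuring "similar" content: split each text into overlapping five-word sequences (shingles) and compute the Jaccard similarity of the two shingle sets. The function names and the shingle size `k=5` are illustrative assumptions, not how Copyscape or Scrapebox actually work.

```python
import re


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short texts: treat the whole word list as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 5) -> float:
    """Share of distinct shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    original = "Duplicate content refers to content that appears on multiple pages across your website."
    copy = "Duplicate content refers to content that appears on multiple pages across your site."
    unrelated = "Canonical URLs tell search engines which version of your content is preferred."
    print(round(jaccard_similarity(original, copy), 2))       # 0.8
    print(round(jaccard_similarity(original, unrelated), 2))  # 0.0
```

The two near-identical sentences share 8 of their 10 distinct shingles, so they score 0.8; where you draw the "duplicate" line is a judgment call.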

01. Copyscape

Copyscape scans your website and compares it against more than 2 billion web pages. It can be slower than some other tools, but it is also more thorough, and its basic search is free.

Once you've identified the copies, you can take appropriate action, such as adding a notice to your content or contacting the site owners and asking them to change or remove the copied material.

02. Scrapebox

Scrapebox searches web pages very quickly and has a user-friendly interface. You can enter a URL to scan only specific pages on your website or an entire domain.

After the analysis, it lists the duplicate domains and pages it found on your website so you can decide which version to keep.
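As a hypothetical illustration of what "a list of duplicate pages on your website" means (this is not Scrapebox's own method), the sketch below groups URLs whose page text is identical once whitespace and letter case are normalized. It assumes you have already extracted each page's main text into a dict mapping URL to text; `content_fingerprint` and `find_exact_duplicates` are illustrative names.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and case, so formatting noise is ignored."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs (keys) whose body text (values) is identical after normalization."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    # Only groups with two or more URLs are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Exact hashing only catches word-for-word copies; near-duplicates need a similarity measure instead.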

03. Google Search Console

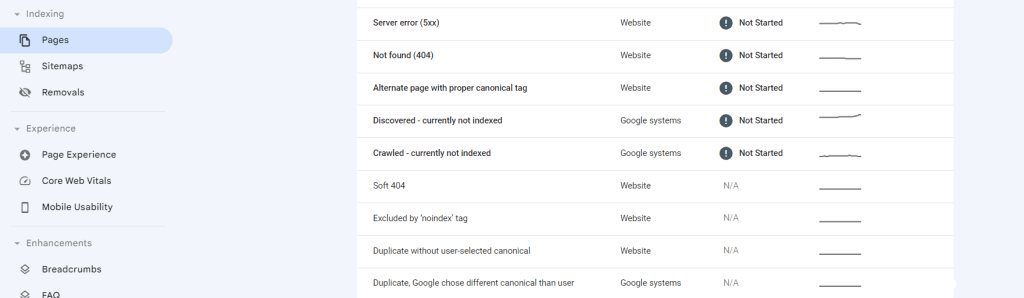

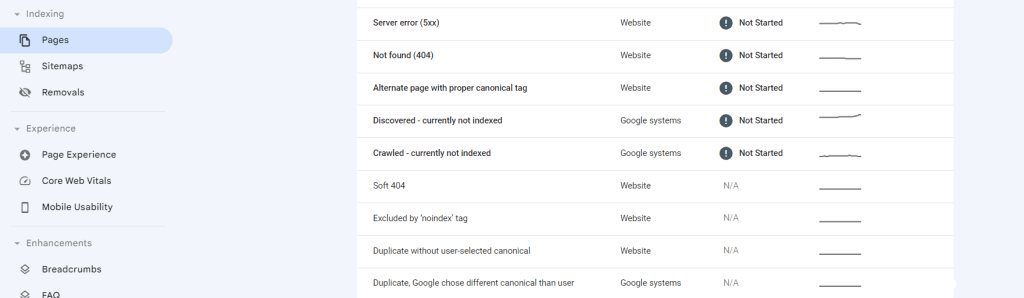

You can also use Google Search Console to identify duplicate content issues on your website. Select your property, go to Indexing > Pages, and review the page indexing report. Reasons such as "Duplicate without user-selected canonical" and "Duplicate, Google chose different canonical than user" point to pages that Google treats as duplicates.

How to Avoid Duplicate Content

There are several ways to avoid duplicate content. Here are a few effective solutions:

01. Create Unique Content

The best way to avoid duplicate content is to produce unique, original content. Write material that's relevant to your target audience, and use keyword research to optimize it for search engines.

Organizing your information into topic clusters also helps, because each page then covers a distinct subtopic instead of repeating material from its neighbors.

02. Use Canonical URLs

Canonical URLs tell search engines which version of your content is the preferred version.

The rel=canonical element is a line of HTML in a page's `<head>` that tells Google which URL is the preferred version of a piece of content, even if that content can be accessed at other URLs. These tags let Google know which version of a page is the "main version."

The canonical tag is useful wherever the same content is available at more than one URL, for example separate mobile and desktop versions of a page, print and web versions of an article, or location-targeted pages with near-identical content. It also applies to any other situation in which duplicate pages are derived from a primary version.

Canonical tags come in two varieties. The first appears on a duplicate page and points away from it, to a different URL. This way, search engines know which page is the "master version" and index that page.

The other type is the self-referencing canonical tag, which points to the page's own URL and identifies that page as the master version. Self-referencing canonicals are good practice, and canonicals that point to another page are a crucial tool for identifying and consolidating duplicate content.
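As a minimal sketch, assuming your pages are rendered by a Python template layer, a helper like the hypothetical `canonical_link_tag` below produces the element to place in the `<head>` of each duplicate page. It escapes the URL for use inside an HTML attribute and refuses relative URLs, since Google recommends absolute URLs in canonical tags.

```python
from html import escape
from urllib.parse import urlsplit


def canonical_link_tag(preferred_url: str) -> str:
    """Build a <link rel="canonical"> element pointing at preferred_url."""
    parts = urlsplit(preferred_url)
    # Require an absolute http(s) URL rather than a relative path.
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"canonical URL must be absolute: {preferred_url!r}")
    # quote=True also escapes quotes, so the URL cannot break out of the attribute.
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'
```

For example, `canonical_link_tag("https://www.example.com/blog/")` returns `<link rel="canonical" href="https://www.example.com/blog/">`, which you would emit on the print, mobile, or other duplicate versions of that article.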
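To check which kind of canonical a page carries, a small audit can parse its HTML with Python's standard-library `HTMLParser` and compare the canonical target with the page's own URL. This is a sketch under stated assumptions: you already have the page URL and HTML, `classify_canonical` and its four labels are hypothetical names, and URLs are compared as exact strings after resolving relative hrefs (so `/a` and `/a/` count as different pages).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.hrefs.append(attr["href"])


def classify_canonical(page_url: str, html: str) -> str:
    """Return 'missing', 'multiple', 'self-referencing', or 'points elsewhere'."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    # Resolve relative hrefs against the page URL before comparing.
    targets = {urljoin(page_url, href) for href in finder.hrefs}
    if not targets:
        return "missing"
    if len(targets) > 1:
        return "multiple"  # conflicting canonicals send mixed signals
    return "self-referencing" if targets.pop() == page_url else "points elsewhere"
```

A print version at `https://www.example.com/a/print/` whose canonical is `https://www.example.com/a/` is reported as "points elsewhere", while the article itself should come back as "self-referencing".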

03. Meta Tags

Meta robots tags, and the other signals your pages currently send to search engines, are also worth reviewing when you assess how much similar content your site has.

If you want to prevent a page, or several pages, from being indexed by Google and appearing in search results, meta robots tags can help.

You can tell Google that you do not want a page to appear on SERPs by adding the noindex meta robots tag, `<meta name="robots" content="noindex">`, to the page's HTML `<head>`. This approach is preferred over robots.txt blocking because it targets a specific page or file precisely, whereas robots.txt rules usually cover larger sections of a site and only stop crawling, not indexing. Google also cannot see a noindex tag on a page that robots.txt prevents it from crawling.

Whatever your reason for adding it, Google honors the noindex directive and should drop the duplicate pages from its results once it recrawls them.
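To audit which pages already carry the directive, a minimal sketch using only Python's standard library can parse a page's HTML and look for `noindex` in `robots` or `googlebot` meta tags (Google documents `none` as equivalent to `noindex, nofollow`). It assumes you already have the HTML as a string; `is_noindexed` is a hypothetical helper, and it does not check the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = (attr.get("content") or "").lower()
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(d.strip() for d in content.split(",") if d.strip())


def is_noindexed(html: str) -> bool:
    """True if the page's meta robots tags tell search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives or "none" in parser.directives
```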

04. Duplicate URLs

On a website, duplicate content can be caused by a number of structural URL components. Without instructions such as canonical tags or redirects, search engines treat each distinct URL as a separate page, even when the content is identical.

The most frequent sources of duplicate URLs are HTTP and HTTPS versions of a page, www and non-www URLs, and URLs with and without a trailing slash. So, make sure each page is reachable at one unique URL that uses HTTPS, carries a canonical tag, and stays within 80 characters.
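One way to see how these variants collapse is a normalization function that maps every form of a URL onto a single preferred one. This is a sketch under assumed site policies: the preferred host `www.example.com` and the no-trailing-slash rule are hypothetical choices you would replace with your own, and query parameters are kept as-is (tracking parameters can also create duplicates but are not handled here).

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical site policy: the host you chose to index, plus the length guideline above.
PREFERRED_HOST = "www.example.com"
MAX_URL_LENGTH = 80


def normalize_url(url: str) -> str:
    """Collapse HTTP/HTTPS, www/non-www, and trailing-slash variants into one URL."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is lowercased and excludes any port
    bare = PREFERRED_HOST.removeprefix("www.")
    if host in (bare, "www." + bare):
        host = PREFERRED_HOST
    path = parts.path or "/"
    if path != "/":
        path = path.rstrip("/") or "/"  # assumed rule: no trailing slash except the root
    # Always use HTTPS; keep the query string and drop any #fragment.
    return urlunsplit(("https", host, path, parts.query, ""))


def is_short_enough(url: str) -> bool:
    """Check a URL against the 80-character guideline."""
    return len(url) <= MAX_URL_LENGTH
```

With this policy, `http://example.com/blog/`, `https://example.com/blog`, and `http://www.example.com/blog/` all normalize to `https://www.example.com/blog`, which is the URL your canonical tags and redirects should point to.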

05. Use 301 Redirects

When you have multiple pages with identical content, use 301 (permanent) redirects to send search engines and visitors to the preferred page.

Redirects are a great way to get rid of duplicate content. Pages that have been copied from another page can be redirected back to the original version.

Redirects are a particularly good solution for duplicate pages on your site that receive heavy traffic or have valuable links from other sites, because the redirect sends those visitors and links on to the preferred page.

There are two key points to keep in mind when using redirects to eliminate duplicate content: always redirect to the higher-performing page to minimize the impact on the performance of your site, and, if possible, use 301 redirects.
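Both rules can be sketched in Python under some loud assumptions: each group of duplicates comes with hypothetical per-page metrics (`clicks`, `referring_domains`) exported from your analytics and backlink tools, "higher-performing" means more referring domains with clicks as the tie-breaker, and the site runs as a WSGI application. In practice you would more often configure redirects in your web server or CMS; this only shows the logic.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    path: str
    clicks: int             # hypothetical: organic clicks from an analytics export
    referring_domains: int  # hypothetical: backlink count from an SEO tool


def build_redirect_map(group: list[PageStats]) -> dict[str, str]:
    """Keep the best-performing page in a duplicate group and map the others to it."""
    # Assumed ranking rule: backlinks first, then traffic.
    winner = max(group, key=lambda p: (p.referring_domains, p.clicks))
    return {p.path: winner.path for p in group if p.path != winner.path}


def redirect_middleware(redirects: dict[str, str], app):
    """Wrap a WSGI app so that mapped paths answer with a permanent (301) redirect."""
    def wrapped(environ, start_response):
        target = redirects.get(environ.get("PATH_INFO", ""))
        if target is not None:
            start_response("301 Moved Permanently", [("Location", target)])
            return [b""]
        return app(environ, start_response)  # everything else is served normally
    return wrapped
```

For a group such as `/shoes-red`, `/red-shoes`, and `/products/7`, the page with the most referring domains is kept and the other two paths return a 301 pointing to it.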

To prevent duplication, you must choose between the www and non-www versions of your domain. Then set up redirects from the other version to the version that should be indexed, such as mysite.com > www.mysite.com, so the duplicate can no longer be reached.

Take note that HTTP URLs pose a security risk: HTTP traffic is unencrypted, while the HTTPS version of a page encrypts it with SSL/TLS, which makes it secure. Redirect HTTP URLs to their HTTPS versions as well.
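A minimal sketch of that rule as WSGI middleware, assuming the preferred origin is `https://www.mysite.com` (a hypothetical choice taken from the example above): any request that arrives over HTTP or on another host gets a 301 to the same path and query on the preferred origin. Behind a proxy that terminates TLS, `wsgi.url_scheme` may report `http` for every request, so in that setup you would check the proxy's forwarded-protocol header instead, or this would redirect in a loop.

```python
from urllib.parse import quote

# Hypothetical choice: index https://www.mysite.com and redirect every other variant to it.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.mysite.com"


def canonical_origin_middleware(app):
    """301-redirect requests on the wrong scheme or host to the preferred origin."""
    def wrapped(environ, start_response):
        scheme = environ.get("wsgi.url_scheme", "http")
        host = (environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "")).lower()
        host = host.split(":")[0]  # compare host names without any port
        if scheme == PREFERRED_SCHEME and host == PREFERRED_HOST:
            return app(environ, start_response)
        # PATH_INFO arrives percent-decoded, so re-encode it for the Location header.
        location = f"{PREFERRED_SCHEME}://{PREFERRED_HOST}{quote(environ.get('PATH_INFO') or '/')}"
        if environ.get("QUERY_STRING"):
            location += "?" + environ["QUERY_STRING"]
        start_response("301 Moved Permanently", [("Location", location)])
        return [b""]
    return wrapped
```

A request for `http://mysite.com/blog/?p=2` is answered with a 301 to `https://www.mysite.com/blog/?p=2`, so only one version of each page stays reachable.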


Conclusion

Duplicate content issues can significantly hurt your website's SEO and overall health. Therefore, it's important to take measures to identify, fix, and prevent duplicate content issues on your website.

By creating unique and relevant content, implementing canonical URLs and 301 redirects, and applying noindex tags where appropriate, you can avoid duplicate content issues and enjoy the many benefits of a well-optimized website.

Now that you understand how duplicate content affects your SEO, you can take proactive steps to avoid it and watch your website climb up the search engine rankings.
